Multiple Choice: A Powerful and Versatile Workhorse

Mike Dickinson, MA, MBA

Introduction

This post is a brief overview of ways to get more benefit from multiple choice (M/C) questions, for both you and your students. It addresses these four points:

- A common framework for developing multiple choice (M/C) items.

- Tips and traps to help you write better questions.

- How to analyze results: Item analysis

- Introduction to formative evaluation

M/C Questions Observe Behavioral Learning Objectives

The purpose of multiple choice questions, as with all questions used on tests, is to determine if the student has mastered a given objective. Ideally, it’s a behavioral learning objective. That is, it can be observed – and thus, evaluated.

- Example: Until we knew better, a lot of us wrote learning objectives like this: Know how to do division. But “know” might mean different things to different people. A more precise verb would be calculate; calculate 120 divided by 30. This elicits specific, observable behavior. You could have the student solve several such problems, enough to infer that they know how to divide.

The “Distractor Answer” is a Powerful Tool Unique to M/C Questions

Unlike short answer or fill-in questions, notice that the answer to a M/C question is right there in plain sight, either on paper or on a screen. Thus, the fundamental behavior in a M/C question is to select the correct answer. Select from what? From two or three distractors. We’ll see more about distractors soon. But before we get into specifics, keep this in mind: The challenge in crafting good M/C questions is for knowledgeable students to not only identify the correct choice, but to know the other choices are incorrect. And, to use distractors that are plausible to those students who have superficial or incomplete knowledge of the subject. One way to do this is to identify “anticipated wrong answers” – points that are common misunderstandings.

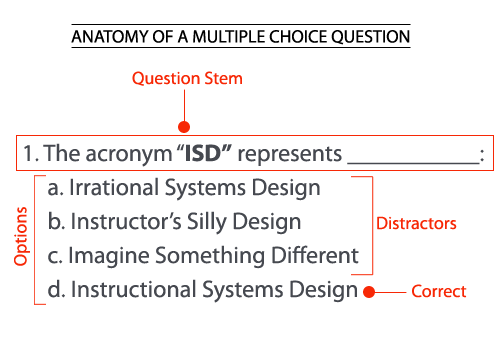

A common M/C framework

Here is a common way to craft an M/C item.

- Stem: The stem should be unambiguous and should contain the question.

- Example: In the Northern Hemisphere, in which season does the sun rise earliest in the morning?

- Choices: Use 1 correct[1] and 3 to 5 incorrect choices, called distractors.

- Example: Summer (Correct answer)

- Simple Distractors:

- Winter

- Spring

- Fall

Making More Challenging Questions

What about making M/C questions harder? The question above is admittedly rather simple. But that’s okay, say, if you want to verify that all or most of the class understands the basic concept. Guard against making questions artificially complex, say, by making the navigation trickier or by throwing in some conditional statements. Rather than do that, identify some higher levels of learning.

All M/C questions must be valid (measure what they purport to measure) and reliable (be consistent over time). They must also be written so the correct answer is clear and unambiguous and yet the distractors seem plausible. Sound challenging? I won’t kid you. Crafting good M/C questions can be a bit time consuming. But once that up-front investment has been made, M/C questions can be workhorses in your summative, formative, and rapid feedback toolboxes for a long time.

How to write a good Multiple Choice question

Naturally there is more than one way to write multiple choice test items. Here’s one that has been well established.

First, write a clear Stem

The stem should include the actual question. Asking the question in the stem serves two important purposes: 1) it orients the student’s thinking so she can focus her attention, rather than leaving the question to the very end where she wonders “Where is this going?”, and 2) it helps ensure a single question is being asked.

Example of a clear construction: Here’s an example with the question in the stem. Note how it cues the student as to what the question is about.

Stem: Whose profile is on the U.S. Penny?

Choices:

- Washington

- Adams

- Lincoln

- Franklin

Example of a less clear construction: Here’s an example of what to avoid: putting the question(s) in the answer choices, not the stem. Notice how one’s thinking must be all over the place: the Revolutionary War, Lincoln’s age, and coins.

Stem: Did Abe Lincoln…

Choices:

- …Argue in favor of the Revolutionary War?

- …Live to be 84 years old?

- …Have his profile depicted on the U.S. penny?

- …Have his profile depicted on the U.S. quarter?

Next, create answer choices with distractors:

(Assuming 4 choices):

– First, write the question and the correct answer.

– Then identify the distractors:

- One should be nearly correct, but be wrong for a specific, tangible reason.

- One could be a common misperception or just plain incorrect.

- One could sound plausible but be clearly wrong to someone who knows the material.

Example:

Stem: What is the value of this mathematical expression: 3³?

Choices:

- A. 6

- B. 9

- C. 27 (correct answer)

- D. 33

Comments:

- Both A and B are anticipated wrong answers. That is, they are not just random numbers but, rather, they are plausible numbers to someone who doesn’t know how to calculate cubed numbers.

- Choice D is less plausible, but still relevant.

- Note that we are not trying to “trick” the student. The question is straightforward and unambiguous. But we do have some distractors that a less-than-fully-knowledgeable student might select, and this could point to remedial coaching or faulty instruction. (See Analysis101)
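The idea of “anticipated wrong answers” in the exponent item above (3 cubed) can be sketched in a few lines of Python. This is only an illustration: the function name `power_item_choices` and the three error labels are hypothetical, chosen to reproduce the choices 6, 9, 27, and 33 from the example.

```python
def power_item_choices(base: int, exponent: int) -> dict[str, int]:
    """Return the correct value of base**exponent plus three anticipated wrong answers.

    The error patterns are assumptions about how a student might misread
    the expression; they are not an exhaustive list.
    """
    return {
        "correct": base ** exponent,
        "added instead of raised": base + exponent,
        "multiplied instead of raised": base * exponent,
        "read the digits side by side": int(f"{base}{exponent}"),
    }


choices = power_item_choices(3, 3)
for reason, value in choices.items():
    print(f"{value:>3}  {reason}")
```

For some inputs the error patterns collide (2 squared gives 4 under three of the four rules), so check that the values are distinct before using them in an item.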

Tips and Traps

This is not meant to be a complete treatise on writing multiple choice question items. Rather, it just points out a few of the more impactful tips and traps.

- Don’t restrict M/C questions to just the lower levels of learning, e.g., recall or select. Although often overlooked, M/C items can work reasonably well for higher levels of learning such as applying principles and analyzing. What they cannot be used for are things like creating (as in a short story) or evaluating, as in judging the worthiness of M/C items(!).

- Make sure each test item matches its corresponding learning objective, especially the verb. Example: If the learning objective is to write something, don’t have the test item ask for editing. (We’ll come back to this point from a different angle later.) But you could have a M/C question where the student selects the correct edit. The student would still not be creating something from their head, since the correct answer would be on paper (or screen), ready to be selected.

- Avoid contrived attempts to make an item humorous or artificially difficult.

- Don’t slice it too thin. If you have two choices that are very close in meaning, students who know the material really well may see it from both angles.

- If your question starts off with something like “Which of the following statements is true?” then you may be creating a list of T/F statements, not a M/C item, and a lot of extraneous reading for the student.

- Sometimes teachers feel a M/C question is too simple so they make the navigation harder, not the content itself, e.g., A, B, C (A and B), D (A and C), E (all the above). But if the facts in a question really are easy, why ask it at all? Instead, ask something about the application of those facts (assuming that is one of the learning objectives).

- In any case, when crafting your M/C questions, use direct wording and focus on a single concept per question.

How to analyze results (of the test, not the student)

If at all possible, find some students to pilot test your test. They should be from a similar population as your students, maybe students who took the same course last year. If no students are available, ask a fellow teacher to look it over for inadvertent hints, grammar and spelling, and content accuracy.

How about some fun? How is your testmanship? The questions in the following quiz each have a correct answer, along with cues from which you can deduce the correct choice. See how you do.

1. The main danger when working with flame pots is

- A. Moisture

- B. Drowsiness

- C. Burns

- D. Cuts

2. The most appropriate thing to carry in the winter is an

- A. Raincoat

- B. Boots

- C. Umbrella

- D. Scarf

3. The superfrazzle works in zero gravity because:

- A. It only has one stage.

- B. It’s a gas

- C. It has more pressure than a plain frazzle, especially if you heat the whole assembly by 10 degrees.

- D. It has a raster blaster.

4. Who won the Nobel Prize in chemistry for 2015?

- A. Lindahl and Modrich

- B. Lindahl and Sancar

- C. All the above

- D. Only Sancar

5. Whose profile is on the U.S. Penny?

- A. Washington

- B. Adams

- C. Jefferson

- D. None of the above

Correct answers:

- Question 1: C (Burns). It’s the only choice that plausibly relates to “flame” pots.

- Question 2: C (Umbrella). The stem ends in “an,” and “umbrella” is the only choice that begins with a vowel.

- Question 3: C (the longest choice). The longest answer, especially if it contains qualifiers like in this example, is often the correct choice.

- Question 4: C (All the above). It just sounds like they should all be included, although that’s admittedly not a sure bet.

- Question 5: D (None of the above). This one has an interesting twist. The correct answer (Lincoln) isn’t even listed, so the student can get the question right without actually mastering the learning objective.

- Also, did you notice that nearly all the correct answers are “C”? Remember to randomize the position of the correct answer as much as feasible.
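One way to avoid the “all C” pattern is to shuffle the choices for each item and record where the correct answer lands. The sketch below is a minimal illustration using Python’s standard `random` module; the function name `shuffle_choices` and the lettering scheme are assumptions, not a feature of any particular test platform.

```python
import random


def shuffle_choices(correct, distractors, rng=None):
    """Shuffle one item's choices and return (lettered choices, answer-key letter)."""
    if rng is None:
        rng = random.Random()
    choices = [correct, *distractors]
    rng.shuffle(choices)  # in-place shuffle; the position of the key is now random
    letters = "ABCDEFGH"  # supports up to eight choices in this sketch
    labeled = {letters[i]: text for i, text in enumerate(choices)}
    key = letters[choices.index(correct)]
    return labeled, key


labeled, key = shuffle_choices("Lincoln", ["Washington", "Adams", "Franklin"])
for letter, text in labeled.items():
    print(f"{letter}. {text}")
print("Answer key:", key)
```

Passing a seeded generator, such as `random.Random(42)`, makes the order reproducible, which helps if the same form must be regenerated later.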

Back to analyzing results: the Item Analysis Report.

Once you have given your test for real, how did it perform? Note: Our focus here is on the test itself, not the students’ performance.

- Did the test distinguish those students who know the material well from those who don’t?

- Were any items confusing or frustrating to the students?

- Did scores run an acceptable range, or do they seem skewed for some reason?

- Did everyone master the objectives (pass the test)? If not, is there something about the instruction that can be changed?

A lot of times our analysis of test results ends right there. But isn’t there more we’d like to know, if only we could extract the data easily? Questions like:

- Were any questions missed by the majority of students?

- How many students missed each question and which answer choices did they select?

- Were a question’s wrong answers selected fairly randomly, or is there one choice that seems to be a sticking point?

- Were any questions missed only by students who earned a high score? Then look for a subtlety that may be misleading those with good subject knowledge.

- Were any questions missed only by students who earned low scores? Leave these questions alone. This is what we want: questions that discriminate between those who know the material and those who don’t.

These and other questions can be answered by use of an item analysis report. This report summarizes all the responses to each question, plus it sorts students by where they placed: e.g., top 20% of those who took the test, bottom 20%, etc.
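If your platform does not produce such a report, the core of it is straightforward to compute. The following is a rough sketch, not a description of any particular product: the function name `item_analysis`, the data layout, and the simple “top group minus bottom group” discrimination figure are all assumptions made for illustration, and the five students are made-up data.

```python
from collections import Counter


def item_analysis(responses, answer_key, group_fraction=0.2):
    """Tally choices per question and compare the top and bottom scoring groups.

    responses:  {student: [chosen letter for each question]}
    answer_key: [correct letter for each question]
    """
    scores = {
        student: sum(a == k for a, k in zip(answers, answer_key))
        for student, answers in responses.items()
    }
    ranked = sorted(scores, key=scores.get, reverse=True)
    n = max(1, round(len(ranked) * group_fraction))  # size of each comparison group
    top, bottom = ranked[:n], ranked[-n:]

    report = []
    for q, correct in enumerate(answer_key):
        counts = Counter(answers[q] for answers in responses.values())
        top_correct = sum(responses[s][q] == correct for s in top) / n
        bottom_correct = sum(responses[s][q] == correct for s in bottom) / n
        report.append({
            "question": q + 1,
            "counts": dict(counts),
            "top_correct": top_correct,
            "bottom_correct": bottom_correct,
            "discrimination": top_correct - bottom_correct,
        })
    return report


# Hypothetical responses from five students on a two-question quiz.
responses = {
    "s1": ["A", "B"],
    "s2": ["A", "B"],
    "s3": ["A", "C"],
    "s4": ["B", "C"],
    "s5": ["C", "D"],
}
report = item_analysis(responses, answer_key=["A", "B"])
for row in report:
    print(row)
```

A discrimination value near 1 means the top group got the item right and the bottom group did not; a value near zero or below suggests the item deserves a second look. Students tied at the cutoff are assigned to groups arbitrarily in this sketch.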

Data gleaned from the item analysis report can help you determine if the test items are doing what you intended, namely, helping to determine the “test-worthiness” of each item and giving you insight into any tweaks that may need to be made. See Analysis101 for more complete coverage, but here is a partial overview of an item analysis report and its interpretation.

Fig. X – Sample Item Analysis Report.

Bold number = correct answer

| Question # | A | B | C | D | Total # answered |
| --- | --- | --- | --- | --- | --- |
| 1 | 3 | 2 | **6** | 3 | 14 |
| 2 | **12** | 0 | 1 | 1 | 14 |
| 3 | **6** | 1 | 0 | 7 | 14 |
| 4 | 0 | 1 | 5 | **8** | 14 |
| 5 | 4 | **2** | 3 | 5 | 14 |

Comments on each question:

#1: Hard but probably fair question. The correct answer was the most popular choice, though fewer than half the class selected it.

#2: Most of the class got it right, with a couple stragglers. No need to change the question.

#3: Split results: Answer D is confusing many students. Find out why and probably change it.

#4: Similar to #3, but with slightly higher percentage getting it right.

#5: Looks like a poor question. Only two students got it right, with results scattered.
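The same reading of the sample report can be done mechanically. In the sketch below, the counts come straight from the table, while the keyed answers (C, A, A, D, B) are an assumption read off the comments above. The function name `summarize` and the “distractor beats key” flag are hypothetical.

```python
# Counts copied from the sample item analysis report.
table = {
    1: {"A": 3, "B": 2, "C": 6, "D": 3},
    2: {"A": 12, "B": 0, "C": 1, "D": 1},
    3: {"A": 6, "B": 1, "C": 0, "D": 7},
    4: {"A": 0, "B": 1, "C": 5, "D": 8},
    5: {"A": 4, "B": 2, "C": 3, "D": 5},
}
# Assumed answer key, inferred from the comments on each question.
assumed_key = {1: "C", 2: "A", 3: "A", 4: "D", 5: "B"}


def summarize(table, answer_key):
    """Return the proportion correct and the most-chosen distractor for each question."""
    rows = []
    for question, counts in table.items():
        correct = answer_key[question]
        total = sum(counts.values())
        top_distractor = max((c for c in counts if c != correct), key=counts.get)
        rows.append({
            "question": question,
            "p_correct": round(counts[correct] / total, 2),
            "top_distractor": top_distractor,
            "distractor_beats_key": counts[top_distractor] >= counts[correct],
        })
    return rows


rows = summarize(table, assumed_key)
for row in rows:
    print(row)
```

Under these assumptions, questions 3 and 5 are flagged because a distractor drew at least as many responses as the keyed answer, which matches the comments recommending a closer look at both.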

How do you obtain an item analysis report? Hopefully your institution’s learning platform has built-in capability for item analysis. In my experience, teachers often don’t realize it’s there, and to be honest, some of them don’t care to use it if it is there. But for those who do use it, it can be an invaluable course design aid – and it can grow with the item or test if used in subsequent classes.

Formative Evaluation

Up to now we’ve been talking about tests. When used as end-of-unit or final exams, usually for a score or a grade, these tests are called summative evaluations or summative assessments. They measure each student’s learning at the end of a chapter, module, course, etc. Thus the term summative.

The other major type of evaluation is called formative evaluation; it covers practically everything else. As the name implies, formative evaluation involves the pieces that make up, or form, the whole. Formative evaluation can be used in two major ways. One is to assess the instruction itself. As we alluded to in the item analysis section above, some of the instruction may need refining in order to be more valid and reliable.

The other kind of formative evaluation assesses the student’s progress along the way. This can include a wide variety of instructional techniques, from a 1-sentence critique to instructional puzzles to pop-quizzes – anything the teacher might use to get a sense of the students’ understanding all along the way, not just at the end. Part of this assessment checks the effectiveness of the content delivery itself, and may indicate areas that need increased emphasis or practice, or a different approach.

For example, say you’re developing a new quiz for a course and you would like to dry-run it before you use it with your whole class. You could give some sample questions, or the whole quiz, to a handful of students and get their feedback. Is the test written at about the right level, or is it too hard or too easy? Are any questions hard to understand? Do any of the questions create that often-sought “ah-ha” moment?

Or, say you’re conducting an experiment in class and you detect that most of the students seem to be puzzled. You take a brief side-step and ask them to talk about their confusion, either verbally as a group, or individually in one or two sentences on a piece of paper. Then you can do a brief mid-course correction and clear up the confusion so students aren’t lost as you go forward with the experiment. So formative evaluation works in two broad arenas: during course development, when you want to test a segment to see how students respond, and during the live course itself, when you may need to make some adjustments as you see the need.


[1] In this white paper we are assuming each item has one correct answer. To be addressed in another paper are questions which have multiple partly-true choices where the task is to select the most nearly correct choice.

About the Author

Mike Dickinson is an instructional designer, author, and former adjunct professor. He was an instructor pilot in three Air Force jet aircraft. Then he became an instructional designer and training manager for over 20 years. Mike introduced the use of online training in Air Force pilot training and in two Fortune 500 companies. He was on a team at a major university that mentored professors in transitioning to online courses. Several university students labeled his hands-on decision-making course as “life changing.” No one fell asleep in that experiential learning class! Mike has developed and delivered classroom and online courses in a variety of topics including Medicare compliance, safety, employment law, job-specific technical training, corporate culture, project management, decision making, and writing. He has an M.A. degree in Instructional Technology and an MBA in Finance. He tutored several students in math. Mike has presented at national training conferences and was a regular contributor to Learning Solutions Magazine for several years. He gets his biggest charge from helping learners of all ages to discover those “ah-ha” moments. Mike and his wife live near San Antonio, Texas. They have two daughters and four grandchildren.